The transition to Network Function Virtualization (NFV) and Software Defined Networking (SDN) represents the most transformative architectural trend in networking in the last 20 years. Because NFV and SDN promise system openness and network neutrality, they are expected to have a profound impact on the shape of future communication networks and services.

Our company, Ethernity Networks, is leveraging Xilinx devices to deliver truly open, highly programmable SDN and NFV solutions. Let's take a look at how Ethernity built its solution, after first exploring the promise and requirements of NFV and SDN.

Ubiquitous hardware and the NFV/SDN revolution

The network infrastructure business of the past few decades has largely been a continuation of the mainframe business model, in which a handful of large companies provide proprietary central office infrastructure that runs proprietary software, all built so as not to communicate with competitors' systems. In most cases, infrastructure vendors create custom hardware for each of their customers' network nodes and build each node with minimal programmability and upgradeability. This ensures that customers who want to scale or upgrade their networks will buy next-generation equipment from the same vendor, or else will buy an entirely new network from another company, only to repeat the same mistake.

Over the past five years, operators, academic institutions, and newcomer vendors have been calling for a transition to ubiquitous hardware, network neutrality, open systems, and software compatibility by maximizing the programmability of hardware and software. NFV and SDN are at the vanguard of this trend, carrying the banner for this growing and sure-to-succeed revolution.

With NFV, companies run a variety of network functions in software on common commercial hardware platforms, rather than running each specific network task on expensive, custom proprietary hardware. Maximizing the programmability of these ubiquitous open platforms enables companies to run, in the data center or even in smaller network nodes, many of the tasks previously performed by specialized hardware devices. NFV also reduces the time required to create new network services, because operators simply upload new network software for a given service to commercial hardware resources as needed. Operators can therefore easily scale their networks and choose best-of-breed features for their business without having to buy and operate new proprietary hardware with limited software flexibility.

NFV is effective because many nodes in the network share common functional requirements. These include switching and routing, classification of millions of flows, access control lists (ACLs), stateful flow awareness, deep packet inspection (DPI), tunnel gateways, traffic analysis, performance monitoring, segmentation, security, and virtual routing and switching. But NFV itself faces many challenges. Internet and data center traffic is expected to grow exponentially in the next few years, so network infrastructure must be able to cope with a huge increase in traffic. Software programmability alone is not enough to let general-purpose hardware scale easily as bandwidth requirements grow. The ubiquitous hardware itself needs to be reprogrammable so that overall system performance can be optimized. This allows vendors and operators to apply NFV and SDN in a "smarter, not harder" way to meet the ever-increasing network demands of the operators' end customers (i.e., consumers). A truly software- and hardware-programmable infrastructure is the only way to fully realize the vision of NFV and SDN.

SDN is a modern approach to networking that uses a standards-based software abstraction between the network control plane and the underlying data forwarding plane in physical and virtual devices. This eliminates the complex and static nature of traditional distributed network infrastructure. Over the past five years, the industry has developed a standard data plane abstraction protocol, OpenFlow, which provides a novel and practical way to configure a network through a centralized software-based controller.

An open SDN platform with centralized software configuration dramatically increases network agility through programmability and automation while dramatically reducing network operating costs. Industry-standard data plane abstraction protocols such as OpenFlow let providers freely use any type and brand of data plane device, because all underlying network hardware can be addressed through a common abstraction protocol. Importantly, OpenFlow makes it easy to use "bare-metal switches" and avoid lock-in to traditional vendors, giving operators the same freedom of choice in networking that is now readily found in other areas of IT infrastructure, such as servers.

Because SDN is still in its infancy, its standards are still evolving. Equipment vendors and operators therefore need to hedge against risk by using the hardware and software programmability of FPGAs to design and operate SDN equipment with maximum flexibility. The FPGA-based SDN devices currently on the market are quite affordable even for large-scale deployments. They offer a high degree of hardware and software flexibility and are as compliant as possible with OpenFlow protocol requirements.

Performance acceleration requirements

Perhaps the most critical requirement for NFV and SDN, beyond openness, is high performance. While NFV hardware appears to be cheaper than proprietary systems, an NFV architecture must sustain competitively high data throughput, meet the complex processing requirements of next-generation networks, and improve energy efficiency.

In fact, the NFV Infrastructure Group Specification contains a dedicated section describing the need for acceleration capabilities to improve network performance. The specification describes how processor components can offload certain functions to a network interface card (NIC) that supports acceleration features such as TCP segmentation, Internet Protocol (IP) fragmentation, DPI, filtering of millions of entries, encryption, performance monitoring/counting, protocol interworking, and OAM.

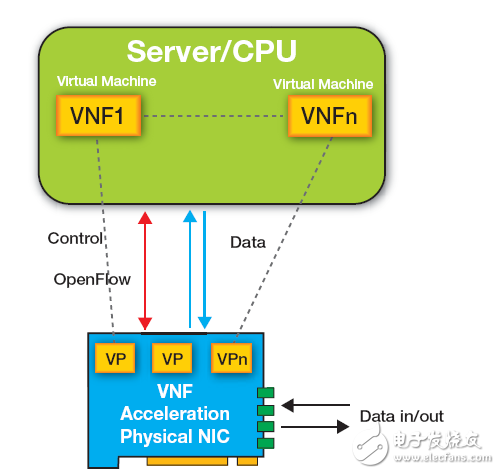

The primary engine that drives this acceleration is the NIC, which provides the physical Ethernet interfaces that connect the server to the network. As shown in Figure 1, when a packet arrives at the NIC through a 10GE, 40GE, or 100GE port, it is placed in a virtual port (VP), or queue, that represents a specific virtual machine (VM), based on tag information such as IP, MAC, or VLAN. The packet is then transferred by DMA directly to the appropriate virtual machine on the server for processing. Each virtual network function (VNF) runs on a different virtual machine, and some network functions require multiple or even dozens of virtual machines.
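The tag-to-virtual-port step described above can be pictured with a minimal software sketch. Everything here is an assumption made for illustration: the `Packet` fields, the `VirtualPortTable` class, and the fixed IP, MAC, VLAN precedence are hypothetical and do not describe how any particular NIC implements its classifier.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    dst_mac: str
    vlan: int
    dst_ip: str


class VirtualPortTable:
    """Maps tag values to per-VM virtual port queues (illustrative only)."""

    def __init__(self):
        self.rules = {}   # (field name, value) -> VM identifier
        self.queues = {}  # VM identifier -> queue of packets awaiting DMA

    def add_rule(self, field_name, value, vm_id):
        self.rules[(field_name, value)] = vm_id
        self.queues.setdefault(vm_id, deque())

    def enqueue(self, pkt):
        # Assumed precedence: match on IP first, then MAC, then VLAN.
        for field_name in ("dst_ip", "dst_mac", "vlan"):
            vm_id = self.rules.get((field_name, getattr(pkt, field_name)))
            if vm_id is not None:
                self.queues[vm_id].append(pkt)
                return vm_id
        return None  # no virtual port matches this packet
```

In this sketch a rule on VLAN 100 steers all of that VLAN's traffic to one VM's queue unless a more specific IP rule matches first.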

OpenFlow-controlled hardware acceleration functions, such as those on the NIC, can be regarded as an extension of the SDN switch. NFV performance across a wide range of functions can be addressed by deploying multiple VNFs across multiple virtual machines and/or cores. This brings two major performance challenges to NFV. The first is the "vSwitch," typically a piece of software that handles network traffic between the Ethernet NIC and the virtual machines. The second is balancing incoming 40/100GE traffic across multiple VMs. When IP fragmentation, TCP segmentation, encryption, or other specialized hardware features are added, NFV software needs assistance to meet performance requirements and reduce power consumption. Ideally, the form factor should also be compact, to reduce the space needed to house the network equipment.

To handle these NFV challenges and the variety of network functions, NICs for NFV and SDN must deliver extremely high performance and be as flexible as possible. A number of chip vendors are racing to be first to market with NFV hardware, and they have proposed platforms for NICs with varying degrees of programmability. Intel is currently the leading provider of NIC components, with its DPDK package for accelerated packet processing. EZchip provides its NPS multi-threaded CPU, which runs Linux and is programmed in C. Marvell offers two complete data plane software suites for its Xelerated processors, for Metro Ethernet and Unified Fiber Access applications, each consisting of an application package running on the NPU and a control plane API running on the host CPU. Cavium has chosen a more generic software development kit for its Octeon product line. L2/L3 switches from Broadcom, Intel, and Marvell are used primarily for lookup and vSwitch offload. Meanwhile, Netronome's new Flow-NIC comes with software that runs on the company's dedicated network processor hardware.

Although all of these products claim to be open NFV approaches, they are not. All of them involve rigid and arguably overly restrictive hardware implementations that are programmable only in software and rely, once again, on rigid, proprietary hardware in SoCs or standard processors.

ALL PROGRAMMABLE ETHERNITY NIC for NFV performance acceleration

To enhance programmability while dramatically improving performance, many companies are investigating a combination of existing CPUs and FPGAs. In the past two years, a number of data center operators (Microsoft in particular) have published papers on the performance improvements they have achieved with hybrid architectures. A Microsoft white paper on its "Catapult" project reported a 95% performance improvement at the cost of only a 10% increase in power consumption. Intel cited the combination of FPGA and CPU in data center NICs as the main reason for its $16.7 billion acquisition of Altera, the second-largest FPGA vendor.

Similarly, the combined CPU and FPGA approach applies to NFV, which runs virtual network functions on virtual machines. In this approach, the FPGA acts as a fully programmable NIC that can be extended to accelerate the virtual network functions running on the server's CPUs/VMs.

But a fully FPGA-based NIC is the ideal COTS hardware architecture for NFV. Firmware from multiple FPGA firmware vendors is available to improve the performance of NFV running on the FPGA NIC. In addition, FPGA companies have recently developed C-based compiler technologies (such as Xilinx's SDAccel™ and SDSoC™ development environments) that enable design entry and programming acceleration in OpenCL™ and C++, opening NFV device design to more users.

To accelerate NFV performance, NFV solution providers have increased the number of VMs in order to distribute VNFs across multiple VMs. Operating multiple VMs raises new challenges in balancing the traffic load between virtual machines and in supporting IP fragmentation. There are also challenges in supporting switching between VMs and between the VMs and the NIC. Software-only vSwitch components do not have enough performance to address these challenges. In addition, the integrity of each VM must be maintained, so that the VM can properly absorb specific bursts of data and does not deliver packets out of order.

Ethernity's ENET FPGA firmware is designed to address these NFV performance issues. It includes a virtual switch/router implementation that accelerates the vSwitch's ability to switch data based on L2, L3, and L4 tags while maintaining a dedicated virtual port for each VM. If a particular VM is not available, ENET can buffer its traffic for up to 100 ms; once the VM becomes available, ENET transfers the data to it via DMA. ENET also includes a standard CFM packet generator and packet analyzer, which provide latency measurements for gauging VM availability and health and which inform ENET's stateful load balancer of each VM's availability for load distribution. The packet reordering engine maintains frame order in cases where packets may fall out of order, which can happen when multiple VMs are used for a single function.

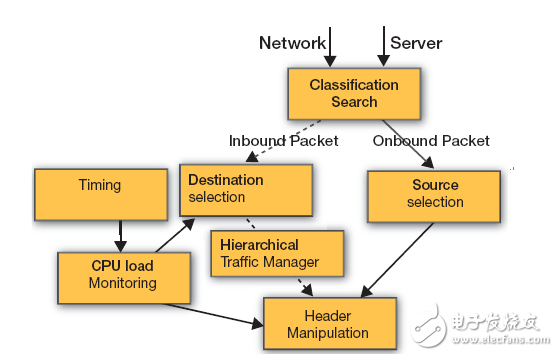

In the classification module, we configured a load-balancing hash algorithm based on the L2, L3, and L4 fields. The algorithm accounts for fragmentation and lets the load balancer distribute traffic based on inner tunnel information (such as VXLAN or NVGRE), while fragmented IP connections can be handled by a specific connection/CPU. For VM-to-VM connections, the classifier and search engine forward the session directly to the target VM instead of sending it through the vSwitch software. At the same time, the classifier assigns a header manipulation rule to each incoming flow based on its routed output, covering IP address modification or protocol offload.
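As a rough illustration of hash-based balancing on inner tunnel headers, here is a minimal sketch. The `Headers` type, the use of CRC32 as the hash, and the rule of hashing fragments on L3 fields only are all assumptions made for the example; the article does not specify the hash function or field selection that ENET uses.

```python
import zlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Headers:
    src_ip: str
    dst_ip: str
    proto: int
    src_port: int = 0
    dst_port: int = 0
    is_fragment: bool = False          # set on every fragment, first included
    inner: Optional["Headers"] = None  # inner headers of a VXLAN/NVGRE tunnel


def flow_key(h):
    # For tunnelled traffic, balance on the innermost headers.
    while h.inner is not None:
        h = h.inner
    if h.is_fragment:
        # Later fragments carry no L4 header, so hash on L3 fields only;
        # this keeps all fragments of a datagram on the same VM.
        return (h.src_ip, h.dst_ip, h.proto)
    return (h.src_ip, h.dst_ip, h.proto, h.src_port, h.dst_port)


def pick_vm(h, vms):
    # CRC32 is a stand-in hash chosen only because it is deterministic.
    digest = zlib.crc32(repr(flow_key(h)).encode())
    return vms[digest % len(vms)]
```

One simplification to note: unfragmented packets of a connection hash on five fields while its fragments hash on three, so a real design would need an extra rule to keep both on the same VM.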

For each new flow, the load balancer in the target selection module assigns a target address from the available VMs using weighted round robin (WRR) scheduling. The WRR weights are configured based on information derived from the VM load monitoring module.
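The following sketch shows one way new flows could be assigned to VMs by weight. It uses the "smooth" WRR variant as a stand-in, since the article does not say which WRR variant ENET implements; the class names and the idea of setting a weight to zero for an unavailable VM are likewise assumptions for illustration.

```python
class WeightedRoundRobin:
    """Smooth weighted round robin over VM identifiers (illustrative)."""

    def __init__(self, weights):
        self.weights = dict(weights)                  # VM -> weight
        self.current = {vm: 0 for vm in self.weights}  # running credit per VM

    def set_weight(self, vm, weight):
        # A load monitor could lower a weight, or set 0 to exclude a VM.
        self.weights[vm] = weight
        self.current.setdefault(vm, 0)

    def next_vm(self):
        candidates = {vm: w for vm, w in self.weights.items() if w > 0}
        if not candidates:
            return None
        total = sum(candidates.values())
        for vm, weight in candidates.items():
            self.current[vm] += weight
        chosen = max(candidates, key=lambda vm: self.current[vm])
        self.current[chosen] -= total
        return chosen


class FlowAssigner:
    """Pins each new flow to a VM; later packets reuse the same choice."""

    def __init__(self, wrr):
        self.wrr = wrr
        self.flow_table = {}  # flow identifier -> VM

    def target_for(self, flow_id):
        if flow_id not in self.flow_table:
            self.flow_table[flow_id] = self.wrr.next_vm()
        return self.flow_table[flow_id]
```

With weights of 3 and 1, three of every four new flows go to the first VM, while packets of an already-assigned flow keep their original target.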

The hierarchical traffic manager module implements hierarchical WRR among the available VMs and maintains an output virtual port for each VM, with three scheduling levels based on priority, VM, and physical port. The CPU level represents a particular VM, while the priority level can allocate weights among the different services/flows served by that VM. Using external DDR3, ENET can buffer up to 100 ms of traffic to ride out transient load on a particular VM.

VM load monitoring uses the ENET programmable packet generator and packet analyzer, which monitor Carrier Ethernet services in compliance with the Y.1731 and 802.1ag standards. The VM load monitoring module maintains availability information for each CPU/VM, using metrics such as those obtained by generating Ethernet CFM Delay Measurement Message (DMM) packets toward the VM. By time stamping each packet and measuring the difference between transmission and reception times, the module can determine the availability of each VM and, on that basis, inform the target selection module which VMs are available.
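The timestamp-difference idea can be sketched as follows. This is a simplified model, not an implementation of Y.1731: it uses only a transmit and a receive timestamp per probe, and the `VmLoadMonitor` class, the delay threshold, and the timeout rule are assumptions chosen for illustration.

```python
class VmLoadMonitor:
    """Tracks probe round-trip delay per VM and derives availability."""

    def __init__(self, max_delay_ns):
        self.max_delay_ns = max_delay_ns  # assumed availability threshold
        self.sent = {}       # (VM, sequence number) -> transmit timestamp
        self.delay_ns = {}   # VM -> latest delay, or None if a probe was lost

    def on_probe_sent(self, vm_id, seq, tx_ns):
        self.sent[(vm_id, seq)] = tx_ns

    def on_reply_received(self, vm_id, seq, rx_ns):
        tx_ns = self.sent.pop((vm_id, seq), None)
        if tx_ns is None:
            return None  # unknown or already timed-out probe
        self.delay_ns[vm_id] = rx_ns - tx_ns
        return self.delay_ns[vm_id]

    def check_timeouts(self, now_ns):
        # A probe unanswered for longer than the threshold marks the VM lost.
        for (vm_id, seq), tx_ns in list(self.sent.items()):
            if now_ns - tx_ns > self.max_delay_ns:
                del self.sent[(vm_id, seq)]
                self.delay_ns[vm_id] = None

    def available_vms(self):
        return sorted(
            vm for vm, delay in self.delay_ns.items()
            if delay is not None and delay <= self.max_delay_ns
        )
```

The list returned by `available_vms` is the kind of input a target selection stage could use to adjust or zero out per-VM weights.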

The source selection module determines which outgoing traffic, sent from the host to the user, will be classified, and identifies the source of each packet. The header manipulation module in ENET performs Network Address Translation (NAT), replacing the incoming destination address with the correct VM IP address so that the NIC can forward flows, packets, or services to the correct VM. For outgoing traffic, NAT does the opposite and sends the packet to the user with its original IP address. The header manipulation module also performs tunnel encapsulation. Here, it applies the manipulation rules assigned by the classifier and strips the tunnel header or other headers on the way to the CPU. In the opposite direction, it restores the original tunnel header on traffic leaving through the user port.
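The two NAT directions described above can be illustrated with a small sketch. The `Packet` fields, the `NatTable` class, and the session key layout are hypothetical; the addresses in the usage note are documentation-range examples, not values from the article.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int


class NatTable:
    """Rewrites addresses toward a VM and restores them on the way out."""

    def __init__(self):
        # (user IP, user port, VM IP, service port) -> original service IP
        self.sessions = {}

    def ingress(self, pkt, vm_ip):
        # User -> VM: remember the original destination, then rewrite it.
        key = (pkt.src_ip, pkt.src_port, vm_ip, pkt.dst_port)
        self.sessions[key] = pkt.dst_ip
        return replace(pkt, dst_ip=vm_ip)

    def egress(self, pkt):
        # VM -> user: put the original service address back as the source.
        key = (pkt.dst_ip, pkt.dst_port, pkt.src_ip, pkt.src_port)
        original_ip = self.sessions.get(key)
        if original_ip is None:
            return pkt  # no session recorded; leave the packet unchanged
        return replace(pkt, src_ip=original_ip)
```

For example, a packet from 198.51.100.7 to service address 203.0.113.10 is rewritten toward VM 10.0.0.5 on ingress, and the VM's reply leaves with 203.0.113.10 as its source again.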

As the number of users on an operator's network grows, the flow table can quickly grow beyond the cache capacity of a standard server. This is especially true for current OpenFlow systems, which require matching on 40 different fields, including IPv6 addresses, Multi-Protocol Label Switching (MPLS), and Provider Backbone Bridge (PBB). The ENET search engine and packet parser can classify on multiple fields and support millions of flows, thus offloading the classification and search functions from software appliances.

Finally, through its packet header manipulation engine, ENET can offload any protocol processing and deliver raw data to the VM, together with TCP segmentation, or provide interworking between various protocols, including 3GPP protocols for virtual EPC (vEPC) implementations, VXLAN, MPLS, PBB, NAT/PAT, and so on.

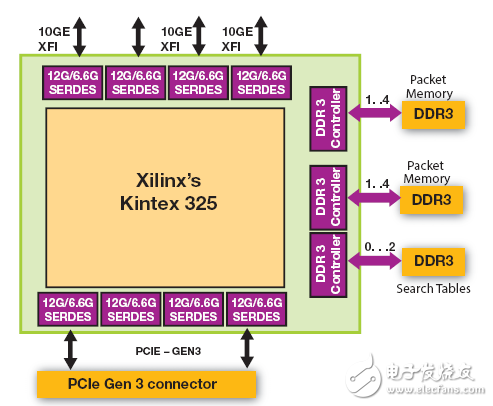

In addition to the firmware, Ethernity also developed what we call the NFV NIC (Figure 3). To create the NIC, we deployed the ENET SoC firmware (which has already been deployed in hundreds of thousands of systems in Carrier Ethernet networks) on a single Xilinx Kintex®-7 FPGA. We also integrated the functionality of five discrete components into the same FPGA: NIC and SR-IOV support; network processing (including classification, load balancing, packet modification, switching, routing, and OAM); 100 ms buffering; frame segmentation; and encryption.

The ACE-NIC is a hardware-accelerated NIC that supports OpenFlow and runs in a COTS server. It improves the performance of vEPC and vCPE NFV platforms by a factor of 50 and greatly reduces the end-to-end latency associated with the NFV platform. The new ACE-NIC comes with four 10GE ports, along with software and hardware designed for an FPGA SoC based on the Ethernity ENET Stream Processor, and supports PCIe® Gen3. The ACE-NIC also carries onboard DDR3 connected to the FPGA SoC, supporting 100 ms of buffering and lookup of one million entries.
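To give a sense of scale for the 100 ms buffer, here is a back-of-the-envelope calculation. It assumes a worst case in which all four 10GE ports receive at full line rate and none of that traffic can be drained for the whole 100 ms; the article does not state the actual DDR3 capacity or how the buffer is dimensioned, so the function and its result are illustrative only.

```python
def buffer_bytes(ports, gbps_per_port, buffer_ms):
    """Bytes needed to hold `buffer_ms` of traffic at full line rate."""
    bits_per_second = ports * gbps_per_port * 1_000_000_000
    bits = bits_per_second * buffer_ms // 1000
    return bits // 8


# Assumed worst case: four 10GE ports, 100 ms of undrained traffic.
worst_case = buffer_bytes(ports=4, gbps_per_port=10, buffer_ms=100)
print(worst_case)  # 500000000 bytes, i.e. 500 MB

# Buffering for a single 10GE port under the same assumption.
single_port = buffer_bytes(ports=1, gbps_per_port=10, buffer_ms=100)
print(single_port)  # 125000000 bytes, i.e. 125 MB
```

Under these assumptions the requirement is on the order of hundreds of megabytes, which is why the buffer sits in external DDR3 rather than in on-chip memory.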

The Ethernity ENET Stream Processor SoC platform uses a patented, unique flow-based processing engine to handle data units of any variable size, providing multi-protocol interworking, traffic management, switching, routing, segmentation, time stamping, and network processing. The platform supports speeds of up to 80 Gbps on the Xilinx 28nm Kintex-7 XC7K325T FPGA, or higher speeds on larger FPGAs.

The ACE-NIC comes with basic features such as per-frame time stamping with nanosecond precision, a packet generator, a packet analyzer, 100 ms buffering, frame filtering, and load balancing between VMs. To serve multiple cloud appliances, it can also assign a virtual port to each virtual machine.

In addition, the ACE-NIC comes with dedicated acceleration for NFV vEPC. These features include frame header manipulation and offload, a 16K virtual port switching implementation, programmable frame segmentation, QoS, counters, and accounting information, all of which can be controlled through OpenFlow for vEPC. With its unique software and hardware design, the ACE-NIC improves software performance by a factor of 50.

ALL PROGRAMMABLE ETHERNITY SDN switch

Similarly, Ethernity integrates the ENET SoC firmware into an FPGA to create a complete All Programmable SDN switch that supports OpenFlow version 1.4 together with full Carrier Ethernet switch functionality, accelerating time-to-market for white-box SDN switch deployments.

The ENET SoC Carrier Ethernet switch is a MEF-compliant L2, L3, and L4 switch/router that can switch and route frames with five levels of packet headers among 16,000 internal virtual ports distributed over more than 128 physical channels. It supports FE, GbE, and 10GbE Ethernet ports as well as four levels of traffic management scheduling. Because ENET's intrinsic architecture supports segmented frames, ENET can perform IP fragmentation and reordering with zero-copy technology, which eliminates the need for dedicated store-and-forward stages for segmentation and reassembly. In addition, ENET has an integrated programmable packet generator and packet analyzer that simplify CFM/OAM operation. Finally, ENET can operate in 3GPP, LTE, mobile backhaul, and broadband access environments. It supports interworking between multiple protocols using only zero-copy operations, with no need to reroute frames for header manipulation.

Clearly, the communications industry is at the beginning of a new era, and we are sure to see a great deal of innovation in the NFV and SDN areas. Any emerging solution for NFV performance acceleration or SDN switching must be able to support newer versions of SDN as the standards evolve. With Intel's acquisition of Altera and the continued growth of more programmable hardware architectures, we are convinced that combined processor and FPGA architectures will become increasingly common, and that new and innovative ways of improving NFV performance will emerge.

FPGA-based NFV NIC acceleration offers the flexibility of NFV on a general-purpose processor (GPP) while providing the throughput that a GPP cannot sustain and accelerating specific network functions that a GPP cannot support. By effectively combining SDN and NFV on an FPGA platform, we can design All Programmable network devices that drive innovation in network applications across a new ecosystem of IP vendors.

Figure 1 – When a packet arrives at the NIC, it enters a virtual port (VP) that represents a particular virtual machine. The packet is then sent via DMA to the appropriate virtual machine on the server for processing.

Figure 2 – This high-level block diagram shows load balancing and switching for virtual machines.

Figure 3 – The Xilinx Kintex FPGA is located in the center of the Ethernity NFV NIC.
