In the rapid development of artificial intelligence, speech recognition has become the standard of many devices. Speech recognition has begun to attract more and more people's attention. Foreign Microsoft, Apple, Google, Nuance, domestic Keda Xunfei, Spirit and other manufacturers Both are developing new strategies for speech recognition and new strategies. It seems that the natural interaction between humans and speech is gradually approaching.

We all want smart and advanced voice assistants like Iron Man to let them understand what you are talking about when communicating with robots. Speech recognition technology has turned the once-dream of mankind into reality. Speech recognition is like a "machine's auditory system," which allows a machine to transform a speech signal into a corresponding text or command by recognizing and understanding.

Speech recognition technology, also known as Automated Speech Recognition AutomaTIc Speech RecogniTIon (ASR), aims to convert vocabulary content in human speech into computer readable input such as buttons, binary codes or sequences of characters. Unlike speaker recognition and speaker confirmation, the latter attempts to identify or confirm the speaker who made the speech rather than the vocabulary content contained therein. Below we will explain in detail the principle of speech recognition technology.

One: Principles of Speech Recognition Technology - The Basic Unit of Speech Recognition SystemSpeech recognition is based on speech. Through speech signal processing and pattern recognition, the machine automatically recognizes and understands the spoken language of human beings. Speech recognition technology is a high-tech technique that allows a machine to transform a speech signal into a corresponding text or command through a recognition and understanding process. Speech recognition is a cross-disciplinary subject with a wide range of disciplines. It is closely related to the disciplines of acoustics, phonetics, linguistics, information theory, pattern recognition theory, and neurobiology. Speech recognition technology is gradually becoming a key technology in computer information processing technology. The application of voice technology has become a competitive emerging high-tech industry.

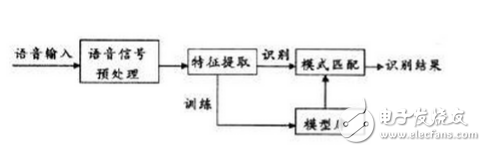

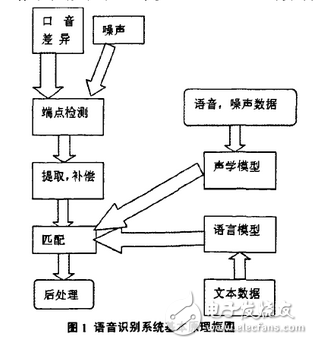

The speech recognition system is essentially a pattern recognition system, including three basic units of feature extraction, pattern matching, and reference pattern library. Its basic structure is shown in the following figure:

The unknown voice is converted into an electrical signal by the microphone and then added to the input end of the recognition system. First, the pre-processing is performed, then the speech model is established according to the characteristics of the human voice, the input speech signal is analyzed, and the required features are extracted. Create a template for speech recognition. In the process of recognition, the computer compares the voice template stored in the computer with the characteristics of the input voice signal according to the model of speech recognition, and finds a series of optimal matching with the input voice according to a certain search and matching strategy. template. Then according to the definition of this template, the recognition result of the computer can be given by looking up the table. Obviously, this optimal result has a direct relationship with the choice of features, the quality of the speech model, and the accuracy of the template.

The speech recognition system construction process as a whole includes two major parts: training and recognition. The training is usually done offline, and the signal processing and knowledge mining of the pre-collected massive speech and language databases are performed to obtain the “acoustic model†and “language model†required by the speech recognition system; the identification process is usually completed online. Automatic recognition of the user's real-time voice. The identification process can usually be divided into two modules: “front end†and “back endâ€: the main function of the “front end†module is to perform endpoint detection (remove unnecessary mute and non-speech), noise reduction, feature extraction, etc.; The function of the "end" module is to use the trained "acoustic model" and "language model" to perform statistical pattern recognition (also called "decoding") on the feature vector of the user's speech, and obtain the text information contained therein. In addition, the back-end module also There is an “adaptive†feedback module that can self-learn the user's voice to perform the necessary “correction†on the “acoustic model†and “speech model†to further improve the accuracy of recognition.

Speech recognition is a branch of pattern recognition, and it belongs to the field of signal processing science. It also has a very close relationship with the disciplines of phonetics, linguistics, mathematical statistics and neurobiology. The purpose of speech recognition is to let the machine "understand" the spoken language of human beings, including two aspects: one is to literally understand the non-translated into written language; the other is to include the requirements or inquiries contained in the spoken language. Understand, make the right response, and not stick to the correct conversion of all words.

The automatic speech recognition technology has three basic principles: first, the language information in the speech signal is encoded according to the time variation pattern of the short-term amplitude spectrum; the second speech is readable, that is, its acoustic signal can be ignored regardless of what the speaker is trying to convey. In the case of information content, it is represented by dozens of distinctive and discrete symbols; the third voice interaction is a cognitive process and thus cannot be separated from the grammatical, semantic and pragmatic structures of the language.

Acoustic model

The model of the speech recognition system usually consists of two parts: the acoustic model and the language model, which correspond to the calculation of the speech-to-syllable probability and the calculation of the syllable-to-word probability. Acoustic modeling

search for

The search in continuous speech recognition is to find a sequence of word models to describe the input speech signal, thereby obtaining a sequence of word decoding. The search is based on scoring the acoustic model scores in the formula and the language model. In actual use, it is often necessary to add a high weight to the language model based on experience and set a long word penalty score.

System implementation

The requirement for the speech recognition system to select the recognition primitive is that it has an accurate definition and can obtain sufficient data for training, which is general. English usually uses context-sensitive phoneme modeling. The collaborative pronunciation of Chinese is not as serious as English, and syllable modeling can be used. The size of the training data required by the system is related to the complexity of the model. The model is designed to be too complex to exceed the training data provided, resulting in a sharp drop in performance.

Dictation: Large vocabulary, non-specific people, continuous speech recognition systems are often referred to as dictation machines. Its architecture is the HMM topology based on the aforementioned acoustic model and language model. During the training, the model parameters are obtained by using the forward-backward algorithm for each primitive. When identifying, the primitives are concatenated into words, and the silent model is added between the words and the language model is introduced as the transition probability between words to form a cyclic structure. The algorithm performs decoding. In order to improve the efficiency of Chinese, it is a simplified method to improve efficiency by first segmenting and then decoding each segment.

Dialogue system: The system used to implement human-machine oral dialogue is called the dialogue system. Limited by current technology, the dialogue system is often oriented to a narrow field, limited vocabulary system, its subject matter is tourism inquiry, booking, database retrieval and so on. The front end is a speech recognizer that identifies the generated N-best candidate or word candidate grid, which is analyzed by the parser to obtain semantic information, and then the dialog manager determines the response information, which is output by the speech synthesizer. Since current systems tend to have a limited vocabulary, semantic information can also be obtained by extracting keywords.

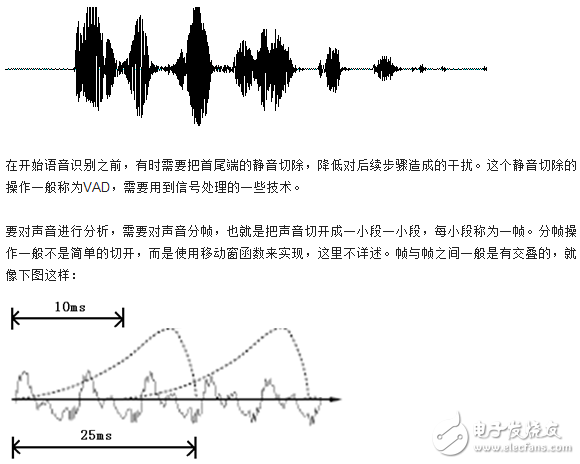

Two: Principles of Speech Recognition Technology - Interpretation of Working PrinciplesFirst, we know that sound is actually a wave. Common mp3 formats are compressed and must be converted to uncompressed pure waveform files, such as Windows PCM files, also known as wav files. In addition to a file header stored in the wav file, it is a point of the sound waveform. The figure below is an example of a waveform.

In the figure, each frame has a length of 25 milliseconds with an overlap of 25-10 = 15 milliseconds between every two frames. We call it a frame length of 25ms and a frame shift of 10ms.

After framing, the voice becomes a lot of small segments. However, the waveform has almost no descriptive power in the time domain, so the waveform must be transformed. A common transformation method is to extract the MFCC features, and according to the physiological characteristics of the human ear, turn each frame waveform into a multi-dimensional vector, which can be simply understood as the vector containing the content information of the frame speech. This process is called acoustic feature extraction. In practical applications, there are many details in this step. The acoustic characteristics are not limited to MFCC, which is not mentioned here.

At this point, the sound becomes a 12-line (assuming the acoustic feature is 12-dimensional), a matrix of N columns, called the observation sequence, where N is the total number of frames. The observation sequence is shown in the figure below. In the figure, each frame is represented by a 12-dimensional vector. The color depth of the color block indicates the size of the vector value.

Next, we will introduce how to turn this matrix into text. First introduce two concepts:

Phoneme: The pronunciation of a word consists of phonemes. For English, a commonly used phoneme set is a set of 39 phonemes from Carnegie Mellon University, see The CMU Pronouncing DicTIonary. Chinese generally uses all initials and finals as phoneme sets. In addition, Chinese recognition is also divided into a tonelessness, which is not detailed.

Status: This is understood to be a more detailed phone unit than a phoneme. Usually a phoneme is divided into 3 states.

How does speech recognition work? In fact, it is not mysterious at all, nothing more than:

The first step is to identify the frame as a state (difficult).

The second step is to combine the states into phonemes.

The third step is to combine the phonemes into words.

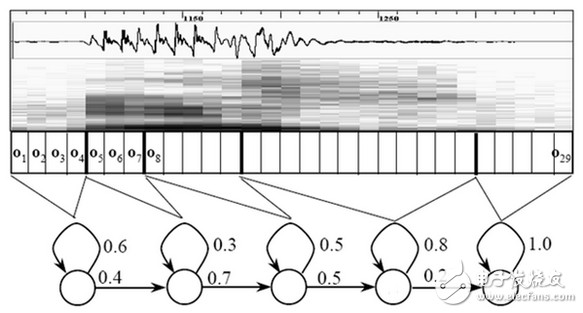

As shown below:

In the figure, each small vertical bar represents one frame, and several frame speeches correspond to one state, and each three states are combined into one phoneme, and several phonemes are combined into one word. In other words, as long as you know which state each voice corresponds to, the result of voice recognition comes out.

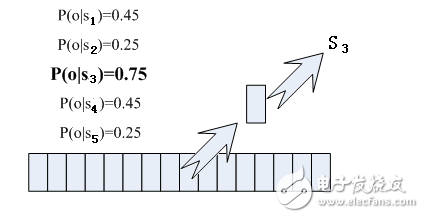

Which state does each phoneme correspond to? There is an easy way to think about which state has the highest probability of a frame, and which state the frame belongs to. For example, in the following diagram, the conditional probability of this frame is the largest in state S3, so it is guessed that this frame belongs to state S3.

Where do the probabilities used are read from? There is a thing called "acoustic model", which contains a lot of parameters, through which you can know the probability of the frame and state. The method of obtaining this large number of parameters is called "training", and a huge amount of voice data is needed. The training method is cumbersome, and is not mentioned here.

But there is a problem with this: each frame will get a status number, and finally the entire voice will get a bunch of state numbers, the status numbers between the two adjacent frames are basically the same. Suppose the voice has 1000 frames, each frame corresponds to 1 state, and every 3 states are combined into one phoneme, then it will probably be combined into 300 phonemes, but this voice does not have so many phonemes at all. If you do this, the resulting status numbers may not be combined into phonemes at all. In fact, the state of adjacent frames should be mostly the same, because each frame is short.

A common way to solve this problem is to use the Hidden Markov Model (HMM). This thing sounds like a very deep look, in fact it is very simple to use:

The first step is to build a state network.

The second step is to find the path that best matches the sound from the state network.

This limits the results to a pre-set network, avoiding the problems just mentioned, and of course, it also brings a limitation. For example, the network you set only contains "days sunny" and "today rain". The state path of the sentence, then no matter what you say, the result of the recognition must be one of the two sentences.

Then what if you want to recognize any text? Make this network big enough to include any text path. But the bigger the network, the harder it is to achieve better recognition accuracy. Therefore, according to the needs of the actual task, the network size and structure should be reasonably selected.

Building a state network is a word-level network that is developed into a phoneme network and then expanded into a state network. The speech recognition process is actually searching for an optimal path in the state network. The probability that the voice corresponds to this path is the largest. This is called “decodingâ€. The path search algorithm is a dynamic plan pruning algorithm called Viterbi algorithm for finding the global optimal path.

The cumulative probability mentioned here consists of three parts, namely:

Probability of observation: probability of each frame and each state

Transition Probability: The probability that each state will transition to itself or to the next state

Language probability: probability obtained according to the law of linguistic statistics

Among them, the first two probabilities are obtained from the acoustic model, and the last probability is obtained from the language model. The language model is trained using a large amount of text, and can use the statistical laws of a language itself to help improve the recognition accuracy. The language model is very important. If you don't use the language model, when the state network is large, the results are basically a mess.

In this way, basically the speech recognition process is completed, which is the principle of speech recognition technology.

Three: Principles of Speech Recognition Technology - Workflow of Speech Recognition System In general, a complete speech recognition system is divided into 7 steps:

1 Analyze and process the speech signal to remove redundant information.

2 Extract key information that affects speech recognition and feature information that expresses the meaning of the language.

3 Close the feature information and identify the word with the smallest unit.

4 Identify words in order according to their respective grammars.

5 The meaning of the front and back is used as an auxiliary recognition condition, which is conducive to analysis and identification.

6 According to the semantic analysis, the key information is divided into paragraphs, the recognized words are taken out and connected, and the sentence composition is adjusted according to the meaning of the sentence.

7 Combine semantics, carefully analyze the interrelationship of contexts, and make appropriate corrections to the statements currently being processed.

Basic block diagram of tone recognition system

The basic principle structure of the speech recognition system is shown in the figure. The principle of speech recognition has three points: 1 the encoding of the language information in the speech signal is performed according to the time variation of the amplitude spectrum; 2 because the speech is readable, that is, the acoustic signal can be ignored without considering the information content conveyed by the speaker. Under the premise, it is represented by multiple distinctive and discrete symbols; 3 the interaction of speech is a cognitive process, so it must not be separated from the grammar, semantics and terminology.

Pre-processing, which includes sampling the speech signal, overcoming the aliasing filtering, removing some of the differences in the pronunciation of the individual and the noise caused by the environment, in addition to the selection of the speech recognition basic unit and the endpoint detection problem. Repeated training is to remove the redundant information from the original speech signal samples by allowing the speaker to repeat the speech multiple times before the recognition, retain the key information, and then sort the data according to certain rules to form a pattern library. Furthermore, pattern matching is the core part of the entire speech recognition system. It is based on certain rules and the similarity between the input characteristics and the inventory model, and then the meaning of the input speech.

The front-end processing first processes the original speech signal and then extracts the features to eliminate the influence of noise and different speaker's pronunciation differences, so that the processed signal can more fully reflect the essential feature extraction of the speech, eliminating noise and different The influence of the speaker's pronunciation difference makes the processed signal more fully reflect the essential features of the voice.

Four: Principles of Speech Recognition Technology - Development HistoryBefore the invention of the computer, the idea of ​​automatic speech recognition has been put on the agenda, and the early vocoder can be regarded as the prototype of speech recognition and synthesis. The "Radio Rex" toy dog ​​produced in the 1920s was probably the earliest speech recognizer. When the dog's name was called, it could be ejected from the base. The earliest computer-based speech recognition system was the Audrey speech recognition system developed by AT&T Bell Labs, which recognizes 10 English digits. Its identification method is to track the formants in the speech. The system received a 98% correct rate. By the end of the 1950s, Denes of the Colledge of London had added grammatical probabilities to speech recognition.

In the 1960s, artificial neural networks were introduced into speech recognition. Two major breakthroughs in this era were linear predictive coding Linear PredicTIve Coding (LPC), and dynamic time warping Dynamic Time Warp technology.

The most significant breakthrough in speech recognition technology is the application of the hidden Markov model Hidden Markov Model. From Baum, the related mathematical reasoning was proposed. After research by Labiner et al., Kai-fu Lee of Carnegie Mellon University finally realized the first large vocabulary speech recognition system Sphinx based on hidden Markov model. Strictly speaking, speech recognition technology has not left the HMM framework.

A huge breakthrough in laboratory speech recognition research came out in the late 1980s: people finally broke through the three major obstacles of large vocabulary, continuous speech and non-specific people in the laboratory. For the first time, these three features were integrated into one. In the system, the typical Sphinx system of Carnegie Mellon University is the first high-performance non-specific, large vocabulary continuous speech recognition system.

During this period, speech recognition research has gone further, and its remarkable feature is the successful application of HMM model and artificial neural network (ANN) in speech recognition. The wide application of the HMM model should be attributed to the efforts of scientists such as Rabiner of AT&TBell Labs. They have engineered the original HMM pure mathematical model to understand and understand more researchers, making statistical methods the mainstream of speech recognition technology. .

In the early 1990s, many famous big companies such as IBM, Apple, AT&T and NTT invested heavily in the practical research of speech recognition systems. Speech recognition technology has a good evaluation mechanism, which is the accuracy of recognition, and this indicator has been continuously improved in laboratory research in the mid-to-late 1990s. Some representative systems are: ViaVoice from IBM and NaturallySpeaking from DragonSystem, NuanceVoicePlatform voice platform from Nuance, Whisper from Microsoft, and VoiceTone from Sun.

According to the different electrolyte materials used in lithium-ion batteries, lithium-ion batteries are divided into liquid lithium-ion batteries (Liquified Lithium-Ion Battery, referred to as LIB) and polymer lithium-ion batteries (Polymer Lithium-Ion Battery, referred to as PLB) or plastic lithium Ion batteries (Plastic Lithium Ion Batteries, referred to as PLB). The positive and negative materials used in polymer lithium-ion batteries are the same as liquid lithium ions. The positive electrode materials are divided into lithium cobalt oxide, lithium manganate, ternary materials and lithium iron phosphate materials. The negative electrode is graphite, and the working principle of the battery is also basic. Unanimous. The main difference between them lies in the difference in electrolytes. Liquid lithium-ion batteries use liquid electrolytes, while polymer lithium-ion batteries are replaced by solid polymer electrolytes. This polymer can be "dry" or "colloidal." of. Polymer lithium ion batteries have the characteristics of good safety performance, light weight, large capacity and low internal resistance.

Polymer Lithium Ion Battery,Durable Lifepo4 Dadn72V 45Ah,High Performance Batteries For Electric Motorcycles,High Performance 72V Batteries

Shandong Huachuang Times Optoelectronics Technology Co., Ltd. , https://www.dadncell.com