A Brief Introduction to the MPEG International Video Coding Standards

Since the 1990s, ITU-T and ISO have formulated a series of audio and video coding standards (source coding standards) and recommendations, which have greatly promoted the practical adoption and industrialization of multimedia technology. In terms of technical progress, the first-generation source coding standards, MPEG-1 and MPEG-2 (completed by 1994), achieve compression ratios of about 50:1 to 75:1. Since the beginning of the new century, second-generation source coding standards have been introduced one after another, with compression ratios reaching about 100:1 to 150:1. These second-generation standards are set to reshape the international digital TV and digital audio-video industry, which had only just taken shape.

There are two major families of international audio and video codec standards: the MPEG series, formulated by ISO/IEC JTC1 and adopted for digital TV; and the H.26x video coding series and G.7xx audio coding series, formulated by the ITU for multimedia communications.

CCITT (the International Telegraph and Telephone Consultative Committee, now part of the International Telecommunication Union, ITU) has proposed a series of audio and video coding algorithms and international standards since 1984. In 1984, CCITT Study Group 15 set up an expert group to study coding for video telephony. After more than five years of work, CCITT Recommendation H.261 was completed and approved in December 1990. Building on H.261, ITU-T completed the H.263 coding standard in 1996. With only a small increase in algorithmic complexity, H.263 delivers better image quality at lower bit rates, and it is the most widely used coding method for IP video communications. H.263+, released by ITU-T in 1998 as the second version of the H.263 recommendation, adds 12 new negotiable modes and other features that further improve compression performance.

MPEG stands for the Moving Picture Experts Group, established in 1988 under the Joint Technical Committee 1 (JTC1) of the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). The working group (ISO/IEC JTC1/SC29/WG11) is responsible for international technical standards for the compression, decompression, processing, and presentation of digital video, audio, and other media. Since 1988, the MPEG expert group has held about four international meetings each year, mainly to formulate, revise, and extend the MPEG family of multimedia standards. These include the video and audio coding standards MPEG-1 (1992) and MPEG-2 (1994), the multimedia coding standard based on audiovisual media objects MPEG-4 (1999), the multimedia content description standard MPEG-7 (2001), and the multimedia framework standard MPEG-21. The MPEG series has become the most influential family of multimedia technology standards, with a far-reaching impact on key information-industry products such as digital TV, audio-visual consumer electronics, and multimedia communications.

Work on the CCITT H.261 standard began in 1984 and was technically completed in 1989, with formal approval following in December 1990. H.261 is the forerunner of MPEG. MPEG-1 shares the same basic data structures, coding tools, and syntax elements as H.261, but the two are not fully compatible; MPEG-1 can be regarded as an extended superset of H.261. Development of MPEG-1 began in 1988 and was essentially completed in 1992. MPEG-2, which can in turn be regarded as a superset of MPEG-1, began in 1990 and was essentially completed in 1994. H.263 began in 1992, and its first edition was completed in 1995. MPEG-4, whose video part builds on MPEG-2 and H.263, began in 1993, and its first version was essentially completed in 1998.

The standards that the MPEG expert group has developed or is developing include:

(1) MPEG-1: became an international standard (ISO/IEC 11172) in November 1992 under the title "Coding of moving pictures and associated audio for digital storage media at up to about 1.5 Mbit/s". MPEG-1 supports video at 352 × 240 pixels and 30 frames per second, or an equivalent format.
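
To put the 1.5 Mbit/s target in perspective, the short calculation below estimates the uncompressed bit rate of the 352 × 240, 30 frames per second format and compares it with that target. It is a sketch under assumptions not stated in the text: 8-bit samples and 4:2:0 chroma sampling (chroma planes at half resolution in both directions); the function name `raw_bitrate_420` is hypothetical.

```python
def raw_bitrate_420(width, height, fps, bits_per_sample=8):
    """Uncompressed bit rate in bit/s for 4:2:0 video: a full-resolution luma
    plane plus two chroma planes subsampled by 2 horizontally and vertically."""
    luma = width * height
    chroma = 2 * (width // 2) * (height // 2)
    return (luma + chroma) * bits_per_sample * fps


raw = raw_bitrate_420(352, 240, 30)  # assumed: 8-bit samples, 4:2:0 chroma
ratio = raw / 1.5e6                  # compared with the 1.5 Mbit/s target rate
print(f"raw {raw / 1e6:.1f} Mbit/s, about {ratio:.0f}:1 at 1.5 Mbit/s")
# prints: raw 30.4 Mbit/s, about 20:1 at 1.5 Mbit/s
```

The resulting ratio depends heavily on the assumed source format and sample depth, and the 1.5 Mbit/s budget also has to carry audio, so the figure is an order-of-magnitude illustration only.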

(2) MPEG-2: became an international standard (ISO/IEC 13818) in November 1994. It is a widely applicable coding scheme for moving pictures and sound. The initial goal was to compress video and its associated audio to 10 Mb/s; experiments showed that it can be applied across a range of 1.5 to 60 Mb/s, and even higher. MPEG-2 is used for compression in digital communications, storage, broadcasting, high-definition television, and more; DVD and digital TV broadcasting both use it. The standard has been extended and revised since 1994.

Video Coding Technology in the MPEG Standards

The MPEG standards are built mainly on three major coding tools. Adaptive block transform coding removes spatial redundancy, and motion-compensated differential pulse code modulation (motion-compensated DPCM) removes temporal redundancy; the two are combined into hybrid coding. Entropy coding then removes the statistical redundancy remaining in the hybrid encoder's output. Auxiliary tools supplement these main tools: some remove residual redundancy in particular parts of the coded data, some adapt the encoding to a specific application, and some format the data into a specific bitstream to facilitate storage and transmission.

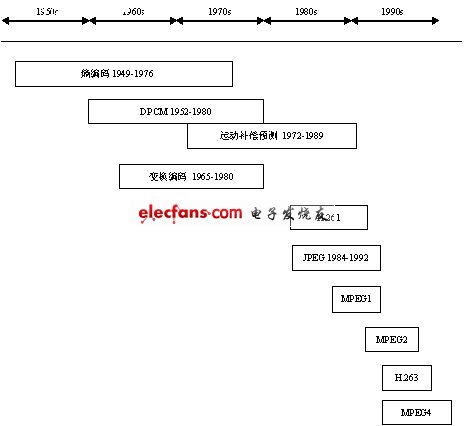
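
As a rough illustration of how block transform coding removes spatial redundancy, the sketch below applies an orthonormal 2-D DCT-II (the transform MPEG-1 and MPEG-2 apply to 8×8 blocks) to a smooth block and measures how much of the non-DC energy lands in a single low-frequency coefficient. The input block is a hypothetical ramp, and the direct four-loop form is chosen for readability rather than speed; real codecs use fast factorizations.

```python
import math

N = 8  # 8x8 blocks, as used by the MPEG-1/MPEG-2 DCT


def dct_2d(block):
    """Orthonormal 2-D DCT-II of an N x N block, written out directly for clarity."""
    def scale(k):
        return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

    coeffs = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            s = 0.0
            for x in range(N):
                for y in range(N):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            coeffs[u][v] = scale(u) * scale(v) * s
    return coeffs


# Hypothetical input: a smooth left-to-right ramp, so neighbouring pixels are highly correlated.
block = [[100 + 4 * y for y in range(N)] for _ in range(N)]
coeffs = dct_2d(block)

total_energy = sum(c * c for row in coeffs for c in row)
ac_energy = total_energy - coeffs[0][0] ** 2
# Fraction of the non-DC energy carried by the lowest horizontal frequency alone.
first_ac_share = coeffs[0][1] ** 2 / ac_energy
print(f"DC = {coeffs[0][0]:.1f}, first AC share of AC energy = {first_ac_share:.1%}")
```

Because the transform is orthonormal, total energy is preserved; what changes is its distribution, which lets a quantizer discard the many near-zero coefficients cheaply.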

Modern entropy coding originated in the late 1940s, was first applied to video coding in the late 1960s, and was continually improved thereafter. In the mid-1980s, two-dimensional variable-length coding (2D VLC) and arithmetic coding were introduced.
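
Two-dimensional VLC codes a (run, level) pair, the number of zeros preceding a nonzero coefficient together with that coefficient's value, as a single symbol. The sketch below shows only how those symbols are formed from a quantized 8×8 block scanned in zigzag order, with trailing zeros collapsed into an end-of-block marker; the actual variable-length code tables defined by each standard are omitted, and the block contents and function names are hypothetical.

```python
def zigzag_order(n=8):
    """(row, col) positions of an n x n block in zigzag order, from DC to the highest frequency."""
    order = []
    for s in range(2 * n - 1):                       # s = row + col indexes each anti-diagonal
        rows = range(max(0, s - n + 1), min(s, n - 1) + 1)
        if s % 2 == 0:                               # even diagonals run bottom-left to top-right
            rows = reversed(rows)
        order.extend((r, s - r) for r in rows)
    return order


def run_level_symbols(block):
    """Form (run, level) symbols from the AC coefficients of a quantized block.
    run = number of zeros before a nonzero level; trailing zeros become a single 'EOB'."""
    scan = [block[r][c] for r, c in zigzag_order(len(block))][1:]  # DC is coded separately
    symbols, run = [], 0
    for level in scan:
        if level == 0:
            run += 1
        else:
            symbols.append((run, level))
            run = 0
    symbols.append("EOB")
    return symbols


# Hypothetical quantized 8x8 block: only a few low-frequency coefficients survive quantization.
q = [[0] * 8 for _ in range(8)]
q[0][0], q[0][1], q[1][0], q[1][1] = 50, -3, 2, 1
print(run_level_symbols(q))  # [(0, -3), (0, 2), (1, 1), 'EOB']
```

Sixty-three AC positions collapse into four symbols here, which is why pairing runs with levels suits the sparse output of transform coding and quantization.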

DPCM was invented in 1952 and was first applied to video coding in the same year. It was originally developed as a spatial coding technique; in the mid-1970s it began to be used for temporal coding. DPCM remained in use as a complete video coding scheme until the early 1980s. From the early to mid-1970s, the key elements of DPCM merged with transform coding, gradually forming hybrid coding, which by the early 1980s had developed into the prototype of MPEG.
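
The core idea of DPCM can be sketched in a few lines: predict each sample, quantize only the prediction error, and keep the encoder's predictor in step with the decoder by predicting from reconstructed values rather than originals. This is a minimal one-dimensional sketch assuming a previous-sample predictor starting at 0 and a uniform quantizer with a fixed step; those choices and the function names are illustrative, not taken from any standard.

```python
def dpcm_encode(samples, step):
    """Closed-loop DPCM: predict each sample from the previous *reconstructed* value,
    transmit the quantized prediction error, and mirror the decoder's reconstruction."""
    indices, recon = [], 0  # assumption: the predictor starts at 0
    for s in samples:
        q = round((s - recon) / step)  # quantized prediction error (what is transmitted)
        indices.append(q)
        recon += q * step              # same update the decoder will perform
    return indices


def dpcm_decode(indices, step):
    """Rebuild samples by accumulating the dequantized prediction errors."""
    out, recon = [], 0
    for q in indices:
        recon += q * step
        out.append(recon)
    return out


# Hypothetical scan line of slowly varying pixel values.
line = [100, 102, 105, 107, 106, 104]
codes = dpcm_encode(line, step=2)
decoded = dpcm_decode(codes, step=2)
print(codes)    # [50, 1, 2, 0, 0, -1]
print(decoded)  # [100, 102, 106, 106, 106, 104]
```

Apart from the first value, the transmitted indices are small and cluster around zero, which is what makes them cheap to entropy-code. Because the loop is closed, each reconstructed sample stays within half a quantizer step of the original and errors do not accumulate.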

Transform coding was first applied to video in the late 1960s, developed substantially in the first half of the 1970s, and is regarded as the most effective spatial coding technique. In hybrid coding, transform coding removes spatial redundancy and DPCM removes temporal redundancy. Motion-compensated prediction, first proposed in 1969, greatly improves the performance of temporal DPCM and had developed into the basic form used in MPEG by the early 1980s. Also in the early 1980s, interpolative coding was developed: intermediate frames were predicted by interpolating between several frames, using scaled motion vectors. Bi-directional prediction did not appear until the late 1980s, bringing this line of technology to its final form. In recent standards such as H.264, prediction quality has improved further, so the prediction residual is less correlated. This reduces the benefit of the transform, and H.264 accordingly uses a simplified 4×4 transform.
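
Motion-compensated prediction and the simplified H.264 transform can be sketched as follows. The motion search assumes full-search block matching with a sum-of-absolute-differences (SAD) cost; the standards specify only how a decoder applies motion vectors, so this search is one common encoder choice, not something MPEG mandates. The 4×4 matrix is the H.264 forward core transform; the scaling that makes it approximate a DCT is omitted, since H.264 folds it into quantization. Frame contents, block size, and search radius are hypothetical.

```python
def sad(cur, ref, bx, by, dx, dy, bs):
    """Sum of absolute differences between a current block and a displaced reference block."""
    return sum(abs(cur[by + i][bx + j] - ref[by + dy + i][bx + dx + j])
               for i in range(bs) for j in range(bs))


def full_search(cur, ref, bx, by, bs=4, radius=2):
    """Exhaustive block matching: the (dx, dy) within +/-radius with the smallest SAD."""
    h, w = len(ref), len(ref[0])
    best = None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if not (0 <= by + dy <= h - bs and 0 <= bx + dx <= w - bs):
                continue  # candidate block would fall outside the reference frame
            cost = sad(cur, ref, bx, by, dx, dy, bs)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best[1], best[2], best[0]


# H.264 4x4 forward core transform matrix; the normalisation is folded into quantization.
CF = [[1, 1, 1, 1],
      [2, 1, -1, -2],
      [1, -1, -1, 1],
      [1, -2, 2, -1]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def core_transform_4x4(x):
    """Y = CF . X . CF^T using only integer arithmetic."""
    cf_t = [list(col) for col in zip(*CF)]
    return matmul(matmul(CF, x), cf_t)


# Hypothetical 12x12 frames: each current block is found at offset (2, -1) in the reference.
ref = [[(7 * x + 13 * y) % 31 for x in range(12)] for y in range(12)]
cur = [[ref[y - 1][x + 2] if y >= 1 and x + 2 < 12 else 0 for x in range(12)]
       for y in range(12)]
dx, dy, cost = full_search(cur, ref, bx=4, by=4)
print(dx, dy, cost)  # 2 -1 0

# The motion-compensated residual is then transformed; a flat residual yields only a DC term.
print(core_transform_4x4([[1] * 4 for _ in range(4)])[0][0])  # 16
```

Full search is the simplest correct strategy but also the most expensive; practical encoders usually substitute faster search patterns at some risk of missing the best match.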

The figure below shows the timeline of the AVS standard (China's Audio Video coding Standard) relative to the related international standards, along with the work already carried out by the AVS working group.

Basic Principles of Video Compression

Video can be compressed fundamentally because video data is highly redundant. Compression means removing that redundancy, and it relies mainly on two classes of techniques: statistical and psychovisual.

Statistical redundancy can be removed because video digitization samples the scene on a regular grid in time and space. Each picture is digitized into a regular array of pixels whose density is high enough to represent the highest spatial frequency anywhere in the picture, yet the vast majority of frames contain little or no detail at that highest frequency. Similarly, the frame rate is chosen to capture the fastest motion in the scene, whereas an ideal compression system only needs to describe the motion actually present at each instant. In short, an ideal compression system adapts dynamically to how the video changes in time and space, and it needs far less data than the raw output of digital sampling.
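
One way to see statistical redundancy in numbers is to compare the first-order entropy of raw pixel values with that of simple prediction errors (differences between horizontal neighbours). The sketch below does this for a hypothetical smooth scan line; first-order entropy is only a rough proxy for what a real codec achieves, and the data and names are illustrative assumptions.

```python
import math
from collections import Counter


def entropy_bits(symbols):
    """First-order (memoryless) entropy of a sequence, in bits per symbol."""
    counts = Counter(symbols)
    n = len(symbols)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


# Hypothetical scan line: a slow gradient, as in a smooth region of a picture.
row = [x // 4 for x in range(256)]             # values 0..63, each repeated 4 times
diffs = [b - a for a, b in zip(row, row[1:])]  # horizontal prediction errors

h_raw = entropy_bits(row)     # 6.0 bits/sample: 64 equally likely values
h_diff = entropy_bits(diffs)  # about 0.81 bits/sample: mostly zeros
print(f"raw: {h_raw:.2f} bits, differences: {h_diff:.2f} bits")
```

The raw values look maximally unpredictable when each is considered in isolation, yet almost all the information disappears once each pixel is predicted from its neighbour; that gap is the redundancy the sampling grid introduced.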

Psychovisual techniques exploit the limits of the human visual system. Human vision is limited in contrast bandwidth, spatial bandwidth (especially for color), and temporal bandwidth. Moreover, these limits are not independent of each other, and the visual system as a whole has an upper limit; for example, the human eye cannot perceive high temporal and high spatial resolution at the same time. There is therefore no need to represent information that cannot be perceived, and a certain degree of compression loss goes unnoticed by the human visual system.
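
The lower spatial bandwidth of color vision is exploited directly by chroma subsampling: MPEG-1 and MPEG-2 Main Profile code pictures in 4:2:0 format, keeping luma at full resolution and chroma at half resolution in each direction. The sketch below converts a tiny RGB image to YCbCr and averages each 2×2 chroma neighbourhood. The full-range (JFIF-style) BT.601 conversion and the simple box average are illustrative assumptions; MPEG video uses studio-range levels and its own chroma sample positions.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JPEG/JFIF-style) conversion; Cb and Cr are centred on 128."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 + 0.5 * (b - y) / (1 - 0.114)
    cr = 128 + 0.5 * (r - y) / (1 - 0.299)
    return y, cb, cr


def subsample_420(plane):
    """Average each 2x2 neighbourhood, halving chroma resolution in both directions."""
    return [[(plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
             for x in range(0, len(plane[0]), 2)]
            for y in range(0, len(plane), 2)]


# Hypothetical 4x4 RGB image (a simple colour gradient).
W, H = 4, 4
image = [[(16 * x, 16 * y, 128) for x in range(W)] for y in range(H)]
ycbcr = [[rgb_to_ycbcr(*px) for px in row] for row in image]

luma = [[p[0] for p in row] for row in ycbcr]               # kept at full resolution
cb = subsample_420([[p[1] for p in row] for row in ycbcr])  # 2x2 chroma plane
cr = subsample_420([[p[2] for p in row] for row in ycbcr])

samples_444 = 3 * W * H
samples_420 = W * H + 2 * len(cb) * len(cb[0])
print(samples_444, samples_420)  # 48 24
```

Halving the sample count this way costs little visible quality for typical natural images, precisely because the eye resolves fine detail in brightness far better than in color.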

A video coding standard is not a single algorithm but a set of coding tools that together deliver the complete compression effect. The history of video compression goes back to the early 1950s; over the following 30 years the main compression techniques and tools were gradually developed, and by the early 1980s video coding technology had taken shape. Initially, each major tool was proposed as a complete video coding solution in its own right, and the main lines of technology developed in parallel. Eventually the best performers were combined into a complete scheme. This integration was driven mainly by the standardization bodies: experts from many countries and organizations jointly carried out the integration work, so the coding schemes in the standards were in effect created by the standards committees. In addition, some techniques proposed many years earlier were not put into practice at the time because they were too costly to implement; only with recent advances in semiconductor technology have they become able to meet the demands of real-time video processing.

Figure 2 Development of coding tools and standards (Cliff, 2002)

(3) MPEG-4: Recognizing the need for low-bandwidth applications, together with the rapid development of interactive graphics applications (synthetic content such as games) and interactive multimedia (the WWW and other content distribution and access technologies), the MPEG expert group set up the MPEG-4 working group to promote the integration of these three areas. At the beginning of 1999, Part 1 of MPEG-4 (ISO/IEC 14496-1), which defines the framework of the standard, became an international standard, as did Part 2 (ISO/IEC 14496-2), which provides the various algorithms and tools; Parts 3, 4, and 5 were still under development.

(4) MPEG-7 and MPEG-21: MPEG-7 is a content description standard for searching, filtering, managing, and processing multimedia information; it became an international standard in July 2001. MPEG-21, which is still being formulated, focuses on the multimedia framework, providing a basic structure for all developed and developing standards related to the delivery of multimedia content.
