As the director of the Huawei Noah's Ark Laboratory, an adjunct professor of Peking University and Nanjing University, we can easily find that Li Hang has a scholar's spirit of exploration and Huawei's unique R&D temperament. At the GAIR conference held this afternoon on the “Artificial Intelligence Business Scene†agenda, Li Hang took the vision of “Intelligent Information Assistant†as an entry point, sharing one of the world’s top 500 companies and leading the Chinese science and technology community. Enterprises, Huawei's development and thinking in the field of artificial intelligence and deep learning.

First, Li Hang clarified the overview of the Noah's Ark Lab. He said that since the laboratory was founded more than four years ago, it has focused on research in cutting-edge scientific and technological fields such as artificial intelligence, machine learning, and data mining. At the same time, we also faced Huawei's three BG business groups focusing on the development of leading-edge products, such as smart communication networks, enterprise BG's big data applications, and consumer BG's intelligent voice assistants.

Secondly, he emphasized that one of the visions of Huawei's Noah's Ark Lab is to create a fully intelligent smart mobile handset terminal. Users will obtain all the information and assistance they want from the terminal through natural language.

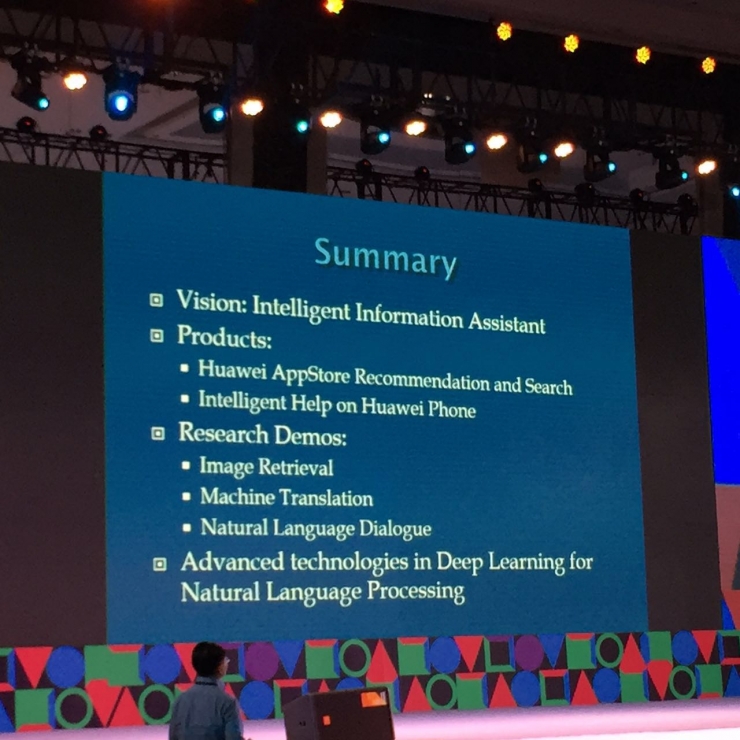

Then he took this vision as his goal and introduced two terminal software products currently developed at Noah’s Ark Lab and three intelligent information retrieval technologies.

The first product is the App market on Huawei mobile phones. He said that in the face of 300 million users, 30 million searches per day, and 100 million downloads of big data challenge, Noah's Ark Lab has been working together with Huawei Terminal to intelligently provide search results for customers and Recommended App.

The second product is Huawei's "mobile phone service." The product is an app on Huawei mobile phone. The user can use natural language to ask for help in the use of the mobile phone. In the help of the problem of 100,000 times daily, there are more than 90% of the users. You can get a satisfactory answer.

Followed by three intelligent search technology using deep learning algorithm.

Three technologiesThe first one is to retrieve classified photographs in natural language. This method does not use manual or machine learning to set a label for each photo in advance, and then processes the photos through labels. Instead, photos are processed using a deep learning model, which uses the content carried by the photos to produce a natural classification.

The second is neural machine translation.

The third is a neural response machine, an automated generative system. Li Hang said that this is the industry's first publicly-aware smart answering machine that can automatically generate replies, instead of using big data search matching.

According to Li Hang, the latter two retrieval techniques are based on sequence-to-sequence learning models. The sentences in the sentences to be translated and the neural response machines are regarded as sequence 1, and the target translation results and the questions in the neural response machine are replied to. Considered sequence 2. The so-called sequence-to-sequence refers to the existence of an intermediate-variable state between two sequences. This intermediate variable is matched with the optimal target by weighting and attention, and can effectively prevent repetition and omission. One of the industry's best depth models. The third neural response machine can output 76% of normal conversations and up to 95% of correct sentences. It needs to be emphasized: these are generated automatically.

Finally, Li Hang concluded again that Noah’s laboratory will focus on intelligent research and development of terminal products while conducting artificial intelligence research and future technological exploration.

The following is a speech record:

Hello everyone! Let me introduce Huawei, especially the research and technology development of Noah's Ark Lab in artificial intelligence. Focus on the application of this smart phone.

I will first introduce our vision on smart phones, introduce our related products and our research, and finally introduce our technology.

Noah's Ark Laboratory was established for 4 years. Professor Yang Qiang is the first person in our laboratory. Our research focuses mainly on artificial intelligence, machine learning, and data mining. Furthermore, we are now working on Huawei's three BG products. Research and development, probably we have 4 directions:

The first is a smart communication network. Everyone knows that communication equipment is a very important product of Huawei. The future communication equipment must be based on data mining, so we have done a lot of technical development in this area. On the other hand, big data, as well as our focus on Huawei's smart phones, mainly in semantic speech, recommend search technology in this area, to help our users better use mobile phones. Simply talk about our vision for the future. We use smart information data to summarize our vision. In the future, Huawei’s smartphones can communicate with users through language. They can help users overcome language barriers, help with translations, understand users’ needs, make recommendations for users, and help users manage information. Good help users get outside information.

Here are two products that we have built around Huawei mobile phones. Our Noah's Ark Lab has close cooperation with the departments of the terminal. We have developed several important products together, such as Huawei’s application market, and Huawei’s mobile phone users. Knowing Huawei's application market, we have application recommendations and searches. This recommendation and search algorithm was developed by our colleagues at Huawei's Ark Labs and Terminal's product line. The challenge is a big data challenge. There are 300 million registered users. Every day, 30 million users visit our market. There are 100 million users downloaded each day. How can this help users find their applications quickly? This is more challenging. The problem, we all know that search and recommendation are all very challenging in the context of big data. How can we update the model from time to time, can better meet the needs of users, and now use the latest technology in the industry as a recommendation? And search.

In addition, if we do all Huawei phones, we can look at this part of the mobile phone service. We have smart questions and answers. How do you use Huawei's mobile phones better? Ask questions in natural language, such as how to back up your phone. We can find the answers. Some of them are the answers found in our technical manuals. The accuracy rate can reach 90%. We can provide users with better help. We do not need to go online to search for Huawei's use.

Now I will make several demonstrations. Our Noah's Ark Lab is developing products together with the product department. On the other hand, we will do some research and development in the future, especially deep learning. We have done some work. I will now demonstrate three things. Demonstration: The first one is the image search. Suppose you are a mobile phone user. You can input your question on the mobile phone by voice or pinyin. For example, you can see the photos of the clouds on the plane. Now this scene is 20,000 pictures can be searched in natural language. These pictures do not have any image processing. For example, pictures of hot pots or pictures of climbing can be automatically found in natural language. Now on mobile phones, There are thousands of photos, how to manage the photos, this is a very useful application, and we are now doing technical development in this area.

The next one is doing machine translation, especially deep learning. Everyone is called neuro-technical translator. This piece also developed industry-leading technology. Because of the time, I will not let go.

This demonstration is a neuro-responsive machine. This is a one-wheeled natural language dialogue based on deep learning. This is the first one we developed in the industry. The generated self-dialogue system can automatically generate answers. It is not Like traditional question answering systems, we have a lot of data, 440,000 data to build such a system, this system was published earlier than other products developed by Google, and this paper was published at the top conference of ACL. . I introduce the contents of it. For example, if you enter a sentence, we can visit you at Noah's Ark Lab. We can give you a look at the time. The day before yesterday, our President of Huawei came to Noah’s Ark Lab to visit us. Show this, for example, I want to buy a Samsung mobile phone, this system will say that it still supports domestically made. For example, if you say that accounting is finally over, the system will say whether the next one is an antler's beak, and it is very interesting to you. Imagined answer.

Let's take a look at this technology. We are doing technical development around the application scenarios of the terminal. This section lists the technical research we mainly do, including question and answer, recommendation, speech recognition, dialogue, translation, pictures, and retrieval. Deep learning means that we have done a series of leading jobs in the industry, especially deep learning. This section introduces some representative work. The first one is MulimodalCNN. The first one is a demonstration of image search. When you say a word, you find the relevant picture. We now have 20,000 pictures. Each picture has about 3 sentences, for example, a child is swimming in a photo. With 150,000 pairs of data, we can train such a model. We all know that volume neural network is a more representative network. The left side can extract this image. This CNN has multiple layers and can be extracted from the image. Inside the outlines and objects, and the other is to extract features from the text, intuitively the characteristics of the words and phrases, you say this picture of children, this child may be extracted as a feature, in this photo there is exactly A child, this child will be extracted as an object, through a lot of learning can do just to see the effect, this can look at the results of our experiments, this is the English 30K data, we did a comparative experiment, We have compared the results of the methods with other sectors of the industry and we can see that the Noah’s Ark Lab proposed Just now, MulimodalCNN can achieve the best results in terms of search. Some models are not necessarily fair. Everyone can achieve better levels for the so-called Exprimental. This work took our paper at the conference of image recognition last year. .

In the next work we introduced, we looked at machine translation and dialogue where we used sequence-to-sequence learning. The earliest models were proposed by Google and the University of Montreal. We improved it and used it for dialogue and translation. Very good results. What is a good way to learn from the sequence to the sequence? We asked which of the deep learning tools in natural language has brought us the most revolutionary change. Let me talk about the sequence to the sequence of learning. The basic idea is this, use translation as an example, and now take a Chinese sentence. Then, a cat sits on the mat "A cat sit a cushion", a sequence-sequence model, a word from left to right to see our Chinese, convert it into a semantic representation, this is a Vector, the HE, HT-E, HT we see now is the semantic representation of this cat sitting on a mat. We call the code, translate this to the target language, and translate it into English. This T-1, Say that English produces the corresponding semantic representation of such a sentence at each position. What we translate to do is to make this original, Chinese code, represent the middle representation, and then convert from the middle representation to another. An intermediate representation is a decoding that converts it into an English sentence. The middle line of C is called the attention model. Attention is to help. Let's go and choose. When I produce a word in English, I want to translate to produce English words one by one. I have to decide selectively. When I am in any position, I have to decide to produce a decoded representation. It's better to choose which of the Chinese language is better. This C actually makes a balance. I'm going to re-judge each position. I'm going to produce an English word when the corresponding Chinese is good. Intuitively speaking, this is an explanation. We can use this model to be actually quite complex. Through this model, you can give me a sequence of any word. I can generate additional sequences. The data is in Chinese and English. I can produce translations. The effect of this model is very good.

The neural response machine we just demonstrated is actually a model of sequence-to-sequence learning. At this time, we have a little different from the Chinese to Chinese sentence. However, unlike the translation, the translation is in two different languages ​​but the semantics. It is the same two or two sentences that form the same round of dialogue with a single language. Our core idea is to use the mechanism of attention, but we have a global mechanism. Intuitively explaining this C actually means that I have read this The overall semantics of the sentence is a vector of 10 values. The result of encoding at each position is to get the semantics of each position. The semantics of the two are combined to become the semantics of the line of intermediate C. , and then convert it into a semantic representation of the corresponding words, and then finally decode it into a sentence. If we have 4,000,000 corresponding data in this model, we can map the model better and we can do this conversion. We see that the correct sentence is about 95%, and about 76% of the respondents are able to form a natural dialogue. This is the example I just gave.

We can now use it in machine translation. The mechanism we use for machine translation is that we say that sequence is very powerful for sequences, but we can do it better. If we use traditional sequences for sequence models, then we will use the original Something has been missed, or it has been translated many times. We have a mechanism here. When translating something, I don’t have to turn over and which do not, and this mechanism can make it stronger. I want to produce English. The following is a difference between a real word and an action word. If it is a Chinese word, if it produces a real word, cat, I would like to see which word influences me. If the two are combined, it will produce good results. The effect was that we published a paper at the top ACL of this week. One of them was to solve the problem of missed translation or over-translation.

Noah's Ark Lab is doing product development for mobile phones and doing research on image retrieval and other technical aspects. Here, thank you!

Low Speed and Middle Speed Generators with Low speed engine or middle speed engine

. Low speed diesel generators, 500rpm, 600rpm

. Middle speed diesel generators, 720rpm, 750rpm, 900rpm, 1000rpm, 1200rpm

. Voltage: 3kV, 3.3kV, 6kV, 6.3kV, 6.6kV, 10kV, 10.5kV, 11kV,13.8kV

. Frequency: 50Hz, 60Hz

Low Speed Generator,Middle Speed Generator,1000Rpm Diesel Generator,500Rpm Diesel Generator

Guangdong Superwatt Power Equipment Co., Ltd , https://www.swtgenset.com